Is there any plan to support libcamera?

-

Hello,

Are there any plans to support libcamera for the MIPI-IMX385 camera? If there is a beta test I could join, I would be more than happy to be your tester.

Thanks, -

@thn

The main purpose of libcamera is to provide ISP functionality, and our module has a built-in ISP, so libcamera is essentially not needed.

That said, libcamera-apps and the GStreamer libcamera plugin do show me where its value lies.

I would really like to support libcamera, but my development schedule is tight right now, so I'm still in the research phase and don't have a clear development plan. -

I think libcamera uses the GPU, and it is more efficient than GStreamer on the Pi platform.

I will be happy to test any new drivers you have. Please contact me if you need help with testing.

Thanks, -

Hello,

I have a follow-up question. Is it possible to run veye_raspipreview under 64-bit Raspberry Pi OS?

Thanks, -

I have a follow-up question. Is it possible to run veye_raspipreview under 64-bit Raspberry Pi OS?

No, legacy mode cannot run on a 64-bit OS.

-

@veye_xumm said in Is there any plan to support libcamera?:

I have a follow-up question. Is it possible to run veye_raspipreview under 64-bit Raspberry Pi OS?

No, legacy mode cannot run on a 64-bit OS.

If my application has to run on 64-bit Pi OS, what would you recommend as the most efficient way to access the IMX385 camera's video frames in real time? GStreamer is too slow. Is there an example in your toolkits?

Thanks, -

@thn

We don't offer a ready-made demo right now, but I can offer a suggestion. Since our module does not need the Raspberry Pi's ISP, there is no need to call libcamera.

libcamera-apps is a useful reference: you can mimic it and write a program that uses the V4L2 video source instead. -
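As a rough illustration of that suggestion: a V4L2 program usually starts by opening the video node and negotiating the pixel format with `VIDIOC_S_FMT`. The sketch below assumes a single-planar capture node such as `/dev/video0` and a packed format such as `V4L2_PIX_FMT_UYVY`; the helper name `open_capture` is hypothetical, and the driver is free to adjust the requested size or stride.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <cstdio>
#include <cstring>
#include <fcntl.h>
#include <linux/videodev2.h>
#include <sys/ioctl.h>
#include <unistd.h>

// Retry an ioctl that was interrupted by a signal (the linuxtv capture.c example does the same).
static int xioctl(int fd, unsigned long request, void *arg)
{
    int r;
    do {
        r = ioctl(fd, request, arg);
    } while (r == -1 && errno == EINTR);
    return r;
}

// Hypothetical helper: open a V4L2 capture node and request a packed pixel format.
// Returns the file descriptor (the caller closes it) or -1 on failure.
// On success, *bytesperline receives the row stride the driver actually chose.
int open_capture(const char *path, std::uint32_t width, std::uint32_t height,
                 std::uint32_t pixelformat, std::uint32_t *bytesperline)
{
    assert(path != nullptr);
    int fd = open(path, O_RDWR);
    if (fd < 0) {
        std::perror("open");
        return -1;
    }

    v4l2_format fmt;
    std::memset(&fmt, 0, sizeof(fmt));
    fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    fmt.fmt.pix.width = width;
    fmt.fmt.pix.height = height;
    fmt.fmt.pix.pixelformat = pixelformat;
    fmt.fmt.pix.field = V4L2_FIELD_NONE;
    if (xioctl(fd, VIDIOC_S_FMT, &fmt) == -1) {
        std::perror("VIDIOC_S_FMT");
        close(fd);
        return -1;
    }

    // S_FMT may adjust the request; reject it if the pixel format was substituted.
    if (fmt.fmt.pix.pixelformat != pixelformat) {
        std::fprintf(stderr, "driver substituted another pixel format\n");
        close(fd);
        return -1;
    }
    if (bytesperline != nullptr)
        *bytesperline = fmt.fmt.pix.bytesperline;
    return fd;
}
```

A call such as `open_capture("/dev/video0", 1920, 1080, V4L2_PIX_FMT_UYVY, &stride)` returns the negotiated row stride, which is the value to use later when wrapping a frame buffer in an image container.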

@veye_xumm said in Is there any plan to support libcamera?:

@thn

We don't offer a ready-made demo right now, but I can offer a suggestion. Since our module does not need the Raspberry Pi's ISP, there is no need to call libcamera.

libcamera-apps is a useful reference: you can mimic it and write a program that uses the V4L2 video source instead.

Actually, we only need to capture the video frames, do some processing with OpenCV, and display them, but it has to be on a 64-bit platform. It would be best if your camera driver supported libcamera. Right now the only way I can do that with your camera is to use OpenCV capture with the GStreamer v4l2src plugin, which is very slow and has a long delay. That is not acceptable for our application; we need real-time frame capture.

I tried the simple V4L2 capture example from linuxtv.org, but it failed to start the stream: https://linuxtv.org/downloads/v4l-dvb-apis-new/userspace-api/v4l/capture.c.html

I am fine with using i2c to control the ISP; I just need to capture the frames in real time in a 64-bit environment, just like the veye_raspipreview code. Thanks,

-
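For comparison with the linuxtv.org capture.c example mentioned above, the memory-mapped buffer setup it performs before `VIDIOC_STREAMON` can be condensed as follows. This is a sketch that assumes the format has already been set on a single-planar capture node; `start_mmap_streaming` and `MappedBuffer` are hypothetical names, and cleanup after a partial failure is omitted. Reporting which ioctl fails, together with its errno message, is usually the quickest way to narrow down why a stream does not start.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <linux/videodev2.h>
#include <sys/ioctl.h>
#include <sys/mman.h>

struct MappedBuffer {
    void *start = nullptr;
    std::size_t length = 0;
};

static int xioctl(int fd, unsigned long request, void *arg)
{
    int r;
    do {
        r = ioctl(fd, request, arg);
    } while (r == -1 && errno == EINTR);
    return r;
}

// Hypothetical helper: on a capture fd whose format is already set, request up to
// max_bufs MMAP buffers, map and queue each one, then start streaming.
// Returns the number of buffers in use, or -1 after printing the failing call.
// (Unmapping already-mapped buffers on failure is omitted for brevity.)
int start_mmap_streaming(int fd, MappedBuffer *bufs, unsigned max_bufs)
{
    assert(bufs != nullptr && max_bufs > 0);

    v4l2_requestbuffers req;
    std::memset(&req, 0, sizeof(req));
    req.count = max_bufs;
    req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    req.memory = V4L2_MEMORY_MMAP;
    if (xioctl(fd, VIDIOC_REQBUFS, &req) == -1) {
        std::perror("VIDIOC_REQBUFS");
        return -1;
    }
    // The driver may grant a different count than requested.
    unsigned n = req.count < max_bufs ? req.count : max_bufs;
    if (n == 0) {
        std::fprintf(stderr, "driver granted no buffers\n");
        return -1;
    }

    for (unsigned i = 0; i < n; ++i) {
        v4l2_buffer buf;
        std::memset(&buf, 0, sizeof(buf));
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = i;
        if (xioctl(fd, VIDIOC_QUERYBUF, &buf) == -1) {
            std::perror("VIDIOC_QUERYBUF");
            return -1;
        }
        bufs[i].length = buf.length;
        bufs[i].start = mmap(nullptr, buf.length, PROT_READ | PROT_WRITE,
                             MAP_SHARED, fd, buf.m.offset);
        if (bufs[i].start == MAP_FAILED) {
            std::perror("mmap");
            return -1;
        }
        // Hand the empty buffer to the driver so it can be filled.
        if (xioctl(fd, VIDIOC_QBUF, &buf) == -1) {
            std::perror("VIDIOC_QBUF");
            return -1;
        }
    }

    v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    if (xioctl(fd, VIDIOC_STREAMON, &type) == -1) {
        std::perror("VIDIOC_STREAMON");
        return -1;
    }
    return static_cast<int>(n);
}
```

After this succeeds, each frame is obtained with `VIDIOC_DQBUF`, read through `bufs[buf.index].start`, and returned with `VIDIOC_QBUF`.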

@veye_xumm

Hello, I am very close to getting the V4L2 API to work. After capturing a frame and displaying it with OpenCV, I got a video like this:

It looks like a pixel alignment issue, and I hope you have some suggestions. Here is the part of the C++ code I use to convert the buffer into an OpenCV Mat:

```cpp
static void process_image(const void *p, int size)
{
    int width = VCOS_ALIGN_UP(1920, 32);
    int height = VCOS_ALIGN_UP(1080, 16);
    Mat image = Mat((int)(height * 3 / 2), width, CV_8U, (uint8_t *)p, width);
    cvtColor(image, image, COLOR_YUV2BGR_I420);
    imshow("T6 Display", image);
    waitKey(1);
}
```

-

@thn

Are you using the V4L2 driver mode?

The data you get directly from /dev/video0 should not be YUV420. I think it is UYVY, which uses two bytes per pixel.

You can check the supported formats with `v4l2-ctl --list-formats-ext`. -
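To make the bytes-per-pixel point concrete: a packed 4:2:2 frame and a planar 4:2:0 frame of the same resolution differ in both size and layout, so reading one as the other cannot line up. A small sketch of the arithmetic and of where luma sits in a UYVY row (the helper names are illustrative, not from any library):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Packed 4:2:2 (UYVY, YUYV, ...): 2 bytes per pixel, all in one plane.
constexpr std::size_t packed422_frame_bytes(std::size_t width, std::size_t height)
{
    return width * height * 2;
}

// Planar 4:2:0 (I420 / 'YU12'): a full-size Y plane plus U and V planes
// at half width and half height, i.e. 1.5 bytes per pixel.
constexpr std::size_t i420_frame_bytes(std::size_t width, std::size_t height)
{
    return width * height * 3 / 2;
}

// UYVY stores each pair of pixels in 4 bytes as U Y0 V Y1 (YUYV uses Y0 U Y1 V),
// so the luma of pixel x in a UYVY row is at byte 2 * x + 1.
inline std::uint8_t uyvy_luma(const std::uint8_t *row, std::size_t x)
{
    assert(row != nullptr);
    return row[2 * x + 1];
}
```

At 1920x1080 that is 4,147,200 bytes for UYVY versus 3,110,400 bytes for I420; treating the UYVY buffer as I420 reads interleaved chroma bytes as luma.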

@veye_xumm said in Is there any plan to support libcamera?:

v4l2-ctl --list-formats-ext

Yes, I use the V4L2 API to access the IMX385 camera. Here is the format listing:

```
v4l2-ctl --list-formats --device /dev/video0
```

```
ioctl: VIDIOC_ENUM_FMT
	Type: Video Capture

	[0]: 'YUYV' (YUYV 4:2:2)
	[1]: 'UYVY' (UYVY 4:2:2)
	[2]: 'YVYU' (YVYU 4:2:2)
	[3]: 'VYUY' (VYUY 4:2:2)
	[4]: 'RGBP' (16-bit RGB 5-6-5)
	[5]: 'RGBR' (16-bit RGB 5-6-5 BE)
	[6]: 'RGBO' (16-bit A/XRGB 1-5-5-5)
	[7]: 'RGBQ' (16-bit A/XRGB 1-5-5-5 BE)
	[8]: 'RGB3' (24-bit RGB 8-8-8)
	[9]: 'BGR3' (24-bit BGR 8-8-8)
	[10]: 'RGB4' (32-bit A/XRGB 8-8-8-8)
	[11]: 'BA81' (8-bit Bayer BGBG/GRGR)
	[12]: 'GBRG' (8-bit Bayer GBGB/RGRG)
	[13]: 'GRBG' (8-bit Bayer GRGR/BGBG)
	[14]: 'RGGB' (8-bit Bayer RGRG/GBGB)
	[15]: 'pBAA' (10-bit Bayer BGBG/GRGR Packed)
	[16]: 'BG10' (10-bit Bayer BGBG/GRGR)
	[17]: 'pGAA' (10-bit Bayer GBGB/RGRG Packed)
	[18]: 'GB10' (10-bit Bayer GBGB/RGRG)
	[19]: 'pgAA' (10-bit Bayer GRGR/BGBG Packed)
	[20]: 'BA10' (10-bit Bayer GRGR/BGBG)
	[21]: 'pRAA' (10-bit Bayer RGRG/GBGB Packed)
	[22]: 'RG10' (10-bit Bayer RGRG/GBGB)
	[23]: 'pBCC' (12-bit Bayer BGBG/GRGR Packed)
	[24]: 'BG12' (12-bit Bayer BGBG/GRGR)
	[25]: 'pGCC' (12-bit Bayer GBGB/RGRG Packed)
	[26]: 'GB12' (12-bit Bayer GBGB/RGRG)
	[27]: 'pgCC' (12-bit Bayer GRGR/BGBG Packed)
	[28]: 'BA12' (12-bit Bayer GRGR/BGBG)
	[29]: 'pRCC' (12-bit Bayer RGRG/GBGB Packed)
	[30]: 'RG12' (12-bit Bayer RGRG/GBGB)
	[31]: 'pBEE' (14-bit Bayer BGBG/GRGR Packed)
	[32]: 'BG14' (14-bit Bayer BGBG/GRGR)
	[33]: 'pGEE' (14-bit Bayer GBGB/RGRG Packed)
	[34]: 'GB14' (14-bit Bayer GBGB/RGRG)
	[35]: 'pgEE' (14-bit Bayer GRGR/BGBG Packed)
	[36]: 'GR14' (14-bit Bayer GRGR/BGBG)
	[37]: 'pREE' (14-bit Bayer RGRG/GBGB Packed)
	[38]: 'RG14' (14-bit Bayer RGRG/GBGB)
	[39]: 'GREY' (8-bit Greyscale)
	[40]: 'Y10P' (10-bit Greyscale (MIPI Packed))
	[41]: 'Y10 ' (10-bit Greyscale)
	[42]: 'Y12P' (12-bit Greyscale (MIPI Packed))
	[43]: 'Y12 ' (12-bit Greyscale)
	[44]: 'Y14P' (14-bit Greyscale (MIPI Packed))
	[45]: 'Y14 ' (14-bit Greyscale)
```

-
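The quoted names in this listing ('YUYV', 'UYVY', 'BA81' and so on) are V4L2 fourcc codes: four ASCII characters packed into the 32-bit `pixelformat` field with the first character in the lowest byte, the same packing as the kernel's `v4l2_fourcc()` macro. A minimal sketch for converting between the two forms, for example when enumerating formats in code (the function names are illustrative):

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Same packing as v4l2_fourcc(a, b, c, d): first character in the lowest byte.
constexpr std::uint32_t fourcc(char a, char b, char c, char d)
{
    return static_cast<std::uint32_t>(static_cast<unsigned char>(a)) |
           (static_cast<std::uint32_t>(static_cast<unsigned char>(b)) << 8) |
           (static_cast<std::uint32_t>(static_cast<unsigned char>(c)) << 16) |
           (static_cast<std::uint32_t>(static_cast<unsigned char>(d)) << 24);
}

static_assert(fourcc('Y', 'U', 'Y', 'V') == 0x56595559u,
              "'Y' (0x59) lands in the lowest byte");

// Turn a pixelformat value (e.g. v4l2_fmtdesc::pixelformat) back into its name.
inline std::string fourcc_to_string(std::uint32_t code)
{
    std::string s(4, ' ');
    for (int i = 0; i < 4; ++i)
        s[i] = static_cast<char>((code >> (8 * i)) & 0xFF);
    return s;
}
```

Note that some names, such as 'Y10 ', include a trailing space as their fourth character.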

@veye_xumm

I would appreciate it if you could give me an example of how to convert the V4L2 buffer into an OpenCV BGR Mat, so that I can use your camera in my project.

Thanks, -

@veye_xumm

Hi again, I finally figured out how to convert the V4L2 frame buffer into an OpenCV BGR Mat. Here is example code in case anyone wants it. I use COLOR_YUV2BGR_YUYV because I had already used the i2c command to set the camera to YUYV.

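The problem with this code is that cvtColor is very CPU-intensive and brings the frame rate down to about 20 FPS. I hope there is a better way to use this camera on 64-bit Pi OS.

```cpp
#include <opencv2/opencv.hpp>
using namespace cv;

// Frame geometry negotiated with the driver (1920x1080 YUYV assumed here).
static const int kWidth = 1920;
static const int kHeight = 1080;

static void process_image(const void *p, int size)
{
    // Packed YUYV is 2 bytes per pixel, so each row is kWidth * 2 bytes.
    // If the driver reports a larger bytesperline, use that as the step instead.
    const size_t step = kWidth * 2;
    if (size < (int)(step * kHeight))
        return; // incomplete buffer
    Mat yuv(kHeight, kWidth, CV_8UC2, const_cast<void *>(p), step);
    Mat bgr;
    cvtColor(yuv, bgr, COLOR_YUV2BGR_YUYV);
    imshow("Display", bgr);
    waitKey(1);
}
```

-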
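For a sense of what cvtColor has to do per frame with COLOR_YUV2BGR_YUYV, here is a plain C++ sketch of a YUYV-to-BGR row conversion. It assumes BT.601 limited-range integer coefficients; OpenCV's exact coefficients, rounding and vectorized implementation may differ, so this illustrates the per-pixel work rather than offering a faster replacement. The function names are illustrative.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>

static inline std::uint8_t clamp_u8(int v)
{
    return static_cast<std::uint8_t>(std::min(255, std::max(0, v)));
}

// Convert one packed YUYV row (Y0 U Y1 V per pair of pixels) to interleaved BGR.
// Uses integer BT.601 limited-range coefficients (an assumption, see above).
// Relies on arithmetic right shift of negative ints, as GCC and Clang implement it.
void yuyv_row_to_bgr(const std::uint8_t *src, std::uint8_t *dst, std::size_t width)
{
    assert(width % 2 == 0); // YUYV stores pixels in pairs that share U and V
    for (std::size_t x = 0; x < width; x += 2) {
        const int u = src[2 * x + 1] - 128;
        const int v = src[2 * x + 3] - 128;
        for (int k = 0; k < 2; ++k) {
            const int c = src[2 * x + 2 * k] - 16; // Y0 or Y1
            const int b = (298 * c + 516 * u + 128) >> 8;
            const int g = (298 * c - 100 * u - 208 * v + 128) >> 8;
            const int r = (298 * c + 409 * v + 128) >> 8;
            std::uint8_t *px = dst + 3 * (x + k);
            px[0] = clamp_u8(b);
            px[1] = clamp_u8(g);
            px[2] = clamp_u8(r);
        }
    }
}
```

At 1920x1080 this loop runs over about 2.07 million pixels per frame, each needing several multiply-adds, which is why the conversion is noticeable on the CPU.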

@thn Hi, we are still experimenting with libcamera.

-

@veye_xumm

Please keep me posted if you have any new libcamera code that I could help test.

Thanks, -

@thn

I would like to outline my thoughts here.

In the last few days I took a brief look at the code of libcamera and libcamera-apps.

They use very new Modern C++ (C++17, I think), which makes me read very slowly. C++ has changed so much over the years that I hardly recognize it anymore. I can't guarantee that everything I say is completely correct.

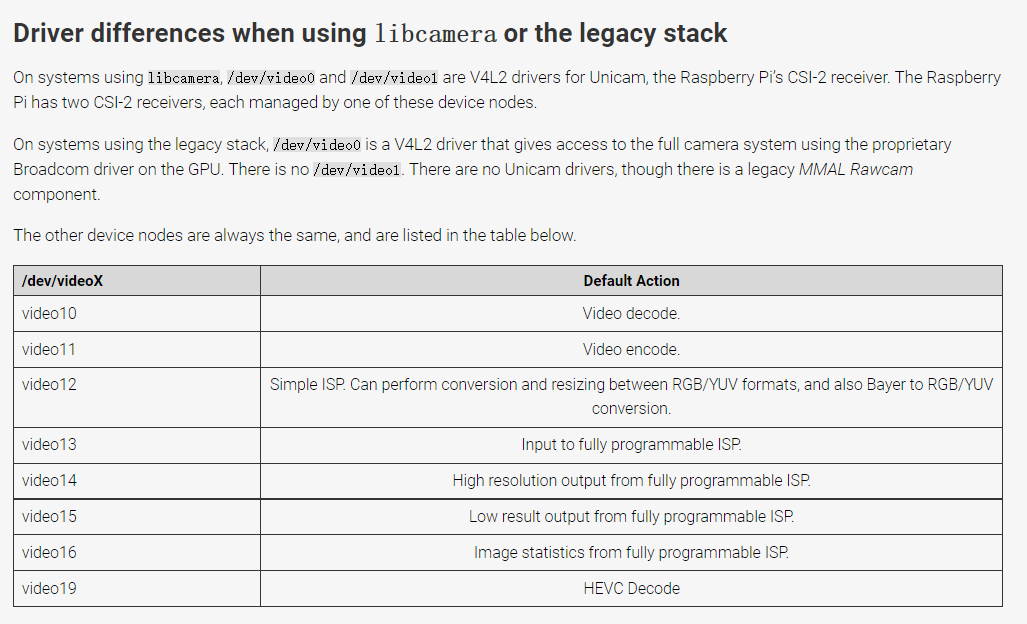

This diagram is from the official Raspberry Pi documentation. It is the basis for our discussion.

The Raspberry Pi wraps the image processing functions of the IPA and GPU at the Linux driver level and exposes them to the application layer as video nodes.

libcamera stack

This is the pipeline of libcamera:

```
sensor's Bayer raw data from video0 ---> video13 ---> [ISP functions] ---> video14 ---> YUV420 ---> apps
```

The apps do not access video14 directly; they use the Streams provided by libcamera.

The solutions I foresee for using VEYE cameras with libcamera

NO.1

Give up on libcamera. libcamera is essentially an ISP service, and VEYE cameras have a built-in ISP, so there is no need to use libcamera at all.

From /dev/video0 we can get the camera's output data directly, in UYVY format.

UYVY is very difficult to use directly: the H.264 and preview components prefer YUV420. This is where video12 comes in handy:

```
VEYE UYVY data from video0 ---> video12 ---> YUV420 ---> apps
```

video12 uses GPU resources for the format conversion, and this is the usage I recommend.

NO.2

NO.1 is a straightforward solution, but unfortunately it rules out using libcamera-apps as an off-the-shelf package.

Another, hackier solution is to create a pipeline inside libcamera that uses video12.

Alternatively, modify the existing pipeline to support UYVY input.

It seems to me that the Raspberry Pi organization has effectively declined to do so. Of course, the above discussion is all theoretical and a long way from code.

-

@veye_xumm

The obvious advantage of supporting libcamera is that the app would be compatible with other cameras that support libcamera. This could become a popular choice in the future, but who knows! Right now I can use the V4L2 API to access /dev/video0 in UYVY format and then convert to BGR for OpenCV processing.

I checked the formats for /dev/video12 with

```
v4l2-ctl --device /dev/video12 --list-formats
```

The result is:

```
ioctl: VIDIOC_ENUM_FMT
	Type: Video Capture Multiplanar

	[0]: 'YUYV' (YUYV 4:2:2)
	[1]: 'YVYU' (YVYU 4:2:2)
	[2]: 'VYUY' (VYUY 4:2:2)
	[3]: 'UYVY' (UYVY 4:2:2)
	[4]: 'YU12' (Planar YUV 4:2:0)
	[5]: 'YV12' (Planar YVU 4:2:0)
	[6]: 'RGB3' (24-bit RGB 8-8-8)
	[7]: 'BGR3' (24-bit BGR 8-8-8)
	[8]: 'AB24' (32-bit RGBA 8-8-8-8)
	[9]: 'BGR4' (32-bit BGRA/X 8-8-8-8)
	[10]: 'RGBP' (16-bit RGB 5-6-5)
	[11]: 'NV12' (Y/CbCr 4:2:0)
	[12]: 'NV21' (Y/CrCb 4:2:0)
```

So should I access /dev/video12 in my V4L2 app and request YUV 4:2:0, or even BGR3? Will your current driver support that?

Thanks, -

@thn I think video12 is just a converter; you can't access it directly to get camera data.

The correct way is to read the camera data from video0, feed it into video12, and then read the YUV420 output from video12. -
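The flow described here (write the video0 frames into video12, read YUV420 back) follows the V4L2 memory-to-memory model: input frames are queued on the node's OUTPUT queue and converted frames are dequeued from its CAPTURE queue. Below is a minimal sketch of the format setup only. It assumes video12 is a multiplanar mem2mem node (as its "Video Capture Multiplanar" listing suggests) that accepts UYVY on its output side; `open_uyvy_to_i420_converter` is a hypothetical name, and buffer allocation, STREAMON on both queues and the per-frame QBUF/DQBUF exchange are omitted.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <cstdio>
#include <cstring>
#include <fcntl.h>
#include <linux/videodev2.h>
#include <sys/ioctl.h>
#include <unistd.h>

static int xioctl(int fd, unsigned long request, void *arg)
{
    int r;
    do {
        r = ioctl(fd, request, arg);
    } while (r == -1 && errno == EINTR);
    return r;
}

// Set a single-plane format on one queue (OUTPUT or CAPTURE) of a multiplanar node.
static bool set_mplane_format(int fd, v4l2_buf_type type, std::uint32_t width,
                              std::uint32_t height, std::uint32_t pixelformat)
{
    v4l2_format fmt;
    std::memset(&fmt, 0, sizeof(fmt));
    fmt.type = type;
    fmt.fmt.pix_mp.width = width;
    fmt.fmt.pix_mp.height = height;
    fmt.fmt.pix_mp.pixelformat = pixelformat;
    fmt.fmt.pix_mp.field = V4L2_FIELD_NONE;
    fmt.fmt.pix_mp.num_planes = 1;
    if (xioctl(fd, VIDIOC_S_FMT, &fmt) == -1) {
        std::perror("VIDIOC_S_FMT");
        return false;
    }
    return fmt.fmt.pix_mp.pixelformat == pixelformat;
}

// Hypothetical helper: open the converter node, UYVY in and planar YUV420 ('YU12') out.
// Returns the fd (the caller closes it) or -1 on failure.
int open_uyvy_to_i420_converter(const char *path, std::uint32_t width, std::uint32_t height)
{
    assert(path != nullptr);
    int fd = open(path, O_RDWR);
    if (fd < 0) {
        std::perror("open");
        return -1;
    }
    // OUTPUT queue: frames we write in (copied or shared from /dev/video0).
    // CAPTURE queue: converted frames we read back.
    if (!set_mplane_format(fd, V4L2_BUF_TYPE_VIDEO_OUTPUT_MPLANE, width, height,
                           V4L2_PIX_FMT_UYVY) ||
        !set_mplane_format(fd, V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE, width, height,
                           V4L2_PIX_FMT_YUV420)) {
        close(fd);
        return -1;
    }
    return fd;
}
```

Feeding the converter would then mean either copying each captured video0 frame into an OUTPUT buffer, or sharing buffers via DMABUF to avoid the copy; which of these the driver stack handles best is not something this sketch establishes.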

@veye_xumm

That sounds like more work than just taking the video0 output and converting it to YUV420 or BGR directly.

Also, can we use two cameras? -

@thn said in Is there any plan to support libcamera?:

Also, can we use two cameras?

Of course; this has nothing to do with the software architecture. The Raspberry Pi CM series provides two camera interfaces.